HDR Explained for Regular People

Danger: This post is pretty old and may not be relevant anymore.

What is HDR?

HDR stands for High Dynamic Range. When an HDR source like a game or video is displayed on an HDR TV, there’s a much bigger difference between dark stuff and light stuff than there is on a regular TV. This dramatically increased contrast makes the whole image appear closer to how we actually see the world and thus more lifelike.

Is HDR the same as 4K?

Nope. With 4K we’re talking about resolution; with HDR, contrast. Though 4K is about more than just resolution.

Is HDR a big deal?

Yes.

Is HDR the next 3D?

No.

What’s the difference between HDR and what I have now?

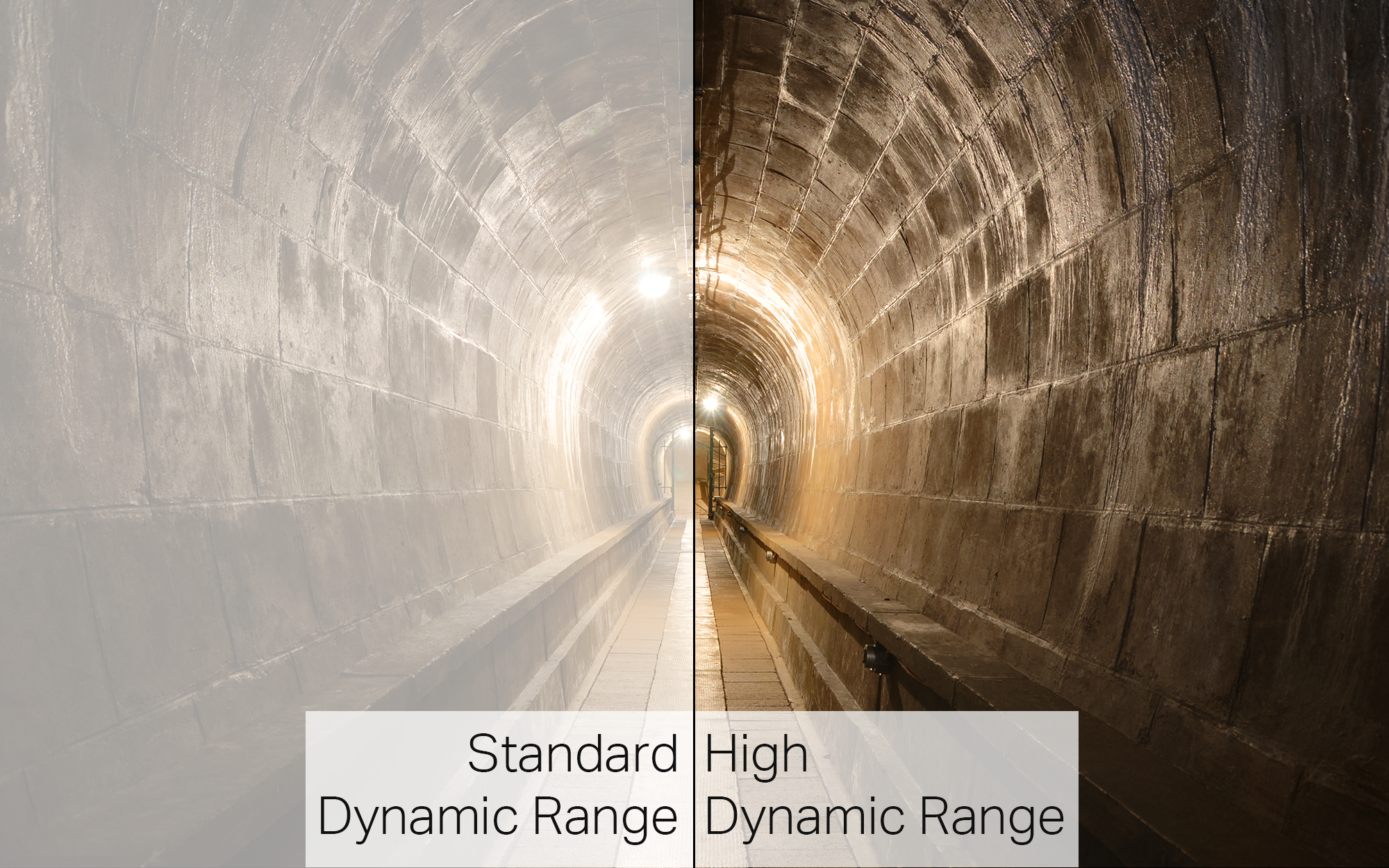

If you put an HDR TV next to your regular one, it’ll look roughly like this.

It just looks brighter.

I know, everybody on Twitter says the same thing. Unfortunately it’s hard to show because the screen you’re reading this on is (probably) not capable of HDR. So this is a standard dynamic range (SDR) image trying to show you the difference between SDR and HDR. Basically, the left side has a much narrower range between black and white, while on the right you can see a lot more difference between the shadows and highlights. That’s what we’re going for: contrast.

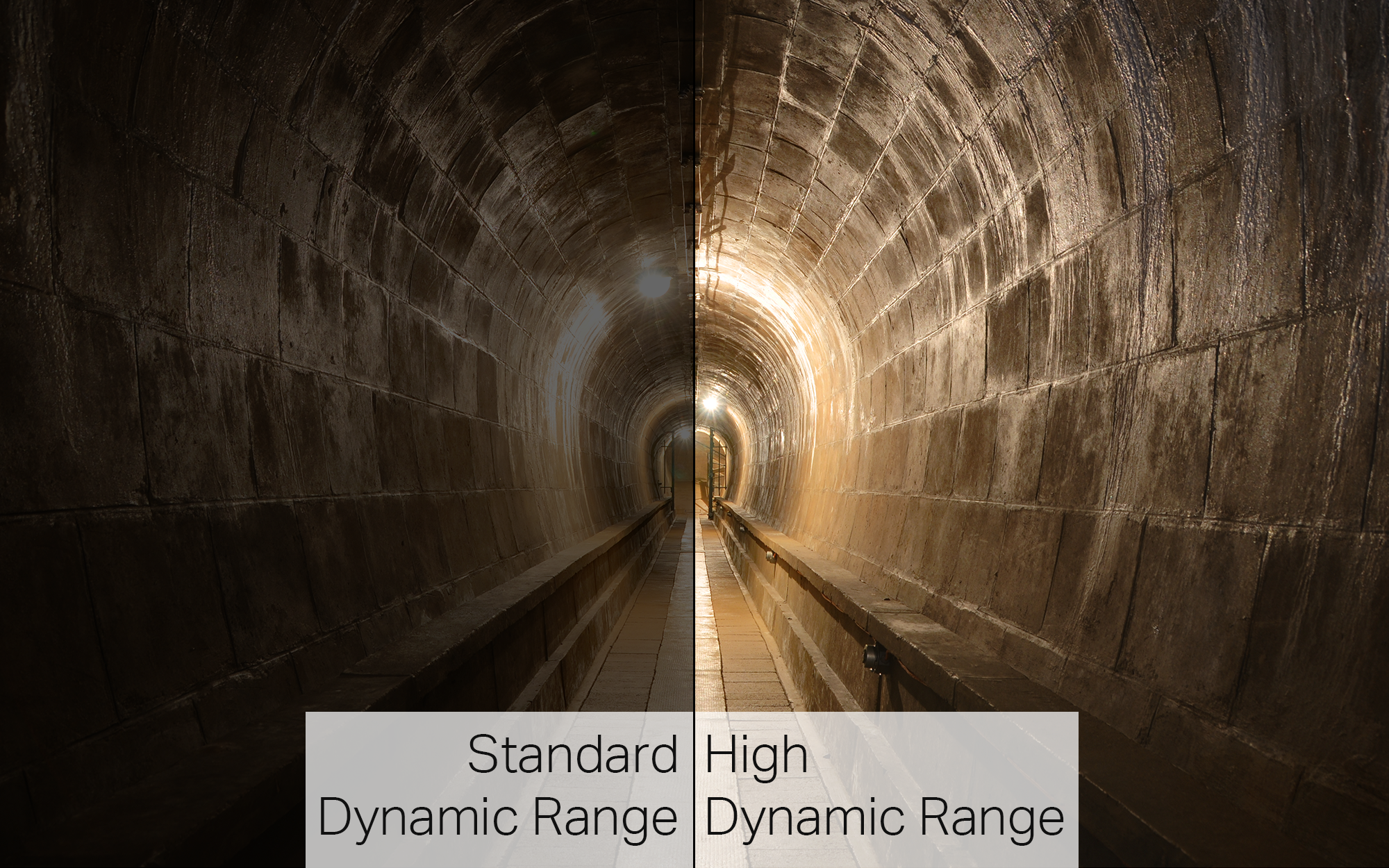

Here, try this one. This is what the difference would look like if you magically matched the brightness of both TVs. See? Significantly less contrast on the SDR side.

Are there different kinds of HDR?

Yep. There are 3 you need to concern yourself with right now:

HDR10 - Open standard

- 10-bit depth

- One HDR setting for the whole piece of content

- Requires HDMI 2.0a+ (and HDCP 2.2 for protected content like Blu-rays)

- The required standard for HDR on Ultra HD Blu-rays

- Not backwards-compatible with SDR displays

Dolby Vision - Closed standard by Dolby (TV makers have to pay to include it)

- 12-bit depth

- HDR settings can change throughout the content (to optimize the HDR scene to scene)

- Requires HDMI 1.4+ (and HDCP 2.2 for protected content)

- Optional on Ultra HD Blu-rays

- Can be backwards-compatible with SDR displays

HLG (technically called ARIB STD-B67 but that’s too much to say) - Royalty-free standard by the BBC and NHK

- 10-bit depth

- No settings (designed to make transmission simpler), so no per-scene optimization

- Requires HDMI 2.0b+ (the HDMI revision that added HLG support)

- Not part of Ultra HD Blu-ray spec

- Can be backwards-compatible with SDR displays

HDR10 and Dolby Vision are the main ones in the American consumer discussion – HLG is being pushed by international broadcasters (namely NHK and the BBC).
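To put the bit depths listed above in perspective, here’s a quick sketch of how many shades per color channel each one gives you. The 8-bit SDR row is my own assumption for comparison (typical SDR content), and the function names are just made up for illustration.

```python
# Shades per color channel for a given bit depth: 2 ** bits.
# The 8-bit SDR entry is an assumption added for comparison; the
# 10-bit and 12-bit values come from the format list above.
BIT_DEPTHS = {
    "SDR (assumed 8-bit)": 8,
    "HDR10": 10,
    "HLG": 10,
    "Dolby Vision": 12,
}


def levels_per_channel(bits: int) -> int:
    """Number of distinct values one color channel can take."""
    return 2 ** bits


def total_colors(bits: int) -> int:
    """Distinct colors across three channels (red, green, blue)."""
    return levels_per_channel(bits) ** 3


for name, bits in BIT_DEPTHS.items():
    print(f"{name}: {levels_per_channel(bits):,} levels per channel, "
          f"{total_colors(bits):,} possible colors")
```

More levels means finer steps between dark and light, which matters more once the range between them gets bigger.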

Which one’s the best? Will we all standardize on one?

It’s still being debated, but I’d bet we’ll end up with more than one. HDR10 seems to be the baseline HDR standard that web delivery and discs are supporting, with Dolby Vision as a premium experience with less widespread support. But what will broadcasters and cable companies do? Dunno yet. That’s why I put HLG on this list – while it isn’t big in the consumer discussion right now, if it gets adopted as our new HDR broadcast standard, it’ll get important real fast.

What are the marketing-speak terms for HDR?

This is probably not complete, because it’s hard to keep up with the made-up names marketing people use. But here goes:

HDR Pro = LG’s name for HDR10.

HDR Super Plus Dolby Vision = apparently LG’s name for Dolby Vision, just with more words thrown in there for no reason.

Super UHD = Samsung’s label for their TVs that have DCI-P3 color gamut and >1,000 nit peak brightness, as well as some other fancy imaging features.

HDR 1000 = Samsung’s label for TVs that can go up to 1,000 nits peak brightness.

HDR Premium = I think this is just Ultra HD Premium worded stupidly, saw it on a Fry’s ad.

Ultra HD Premium = a certification by the UHD Alliance, means the TV supports 4K, HDR (doesn’t specify which implementation though), and wide color gamut.

X-tended Dynamic Range Pro, Peak Illuminator Ultimate, Ultra Luminance, Dynamic Range Remaster = 2015 buzzwords that appear to be deprecated now that we have real standards to point to.

Which TV should I buy?

This changes almost daily, so I’m not going to pick one. But wait as long as humanly possible. Personally I’m not buying anything until at least after CES 2017, maybe longer.

What aside from the display needs to be HDR compatible?

Literally everything in the chain. Source and TV obviously, but also anything the signal flows through to get to the TV – this means splitters, receivers, converters, anything. In the case of a 4K HDR10 source, this means that everything has to support HDMI 2.0a AND HDCP 2.2. So be very careful, this is still a pretty big gotcha for a lot of people.
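If you want to sanity-check your own setup, the "everything in the chain" rule boils down to something like the sketch below. The `Device` class, the `weak_links` function, and the example gear are all hypothetical; you’d fill in the real capabilities from each device’s spec sheet.

```python
from dataclasses import dataclass


@dataclass
class Device:
    """One box in the signal chain (hypothetical structure for illustration)."""
    name: str
    hdmi_2_0a: bool  # supports HDMI 2.0a or newer
    hdcp_2_2: bool   # supports HDCP 2.2


def weak_links(chain):
    """Return the names of devices that would break a 4K HDR10 signal."""
    return [d.name for d in chain if not (d.hdmi_2_0a and d.hdcp_2_2)]


# Example chain with made-up capabilities: the receiver is the problem.
chain = [
    Device("UHD Blu-ray player", hdmi_2_0a=True, hdcp_2_2=True),
    Device("AV receiver", hdmi_2_0a=False, hdcp_2_2=True),
    Device("HDR TV", hdmi_2_0a=True, hdcp_2_2=True),
]

print(weak_links(chain))  # ['AV receiver']
```

An empty list means every hop passes both checks; a single name in the list is enough to lose HDR.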

Will I need to buy new HDMI cables to use an HDR TV?

Technically you need a “High Speed” HDMI cable. Can your current cables do 1080p? If so, they’re High Speed, and you’re fine.

The one big exception is if you’re doing very long runs over HDMI – if you’re already at the hairy edge of an HDMI connection with 1080p (which usually happens somewhere around 30-40 ft with really good cable), adding any amount of data to the signal will probably kill you. Use shorter cables, or invest in something designed for long runs.
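For a rough sense of how much data gets added, here’s a back-of-the-envelope sketch of uncompressed video bit rates. The assumptions are mine, not from any spec: 60 frames per second, three full-resolution color channels, and no blanking or link-encoding overhead, so real HDMI numbers will be somewhat higher. The function name `raw_gbps` is made up for this example.

```python
# Rough uncompressed video bit rate:
#   pixels per frame x frames per second x bits per pixel.
# Assumptions (illustrative only): 60 fps, three full-resolution color
# channels, and no blanking or HDMI link-encoding overhead.

def raw_gbps(width: int, height: int, fps: int, bits_per_channel: int) -> float:
    """Approximate raw video data rate in gigabits per second."""
    bits_per_pixel = bits_per_channel * 3  # red, green, blue
    return width * height * fps * bits_per_pixel / 1e9


signals = {
    "1080p SDR (8-bit)": (1920, 1080, 60, 8),
    "1080p HDR (10-bit)": (1920, 1080, 60, 10),
    "4K SDR (8-bit)": (3840, 2160, 60, 8),
    "4K HDR (10-bit)": (3840, 2160, 60, 10),
}

for name, args in signals.items():
    print(f"{name}: {raw_gbps(*args):.2f} Gbit/s")
```

Under these assumptions, going from 8-bit to 10-bit at the same resolution adds 25% more data, and the jump to 4K (four times the pixels of 1080p) is where the really big increase comes from.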

Can you get an HDR 4K TV or are they mutually exclusive?

HDR is independent of resolution. There are specs for HDR 1080p, as well as 4K and 8K, but those resolutions can also be SDR. So it’s possible for a TV to be 4K and not HDR, or HDR and not 4K. That said, it’s very unlikely anyone will release a 1080p-only HDR TV. And there is no HDR spec for 1080i or 720p.

Will a standard Blu-ray support HDR? Or will it be a separate product?

There’s a specific Blu-ray version called Ultra HD Blu-ray that supports HDR, specifically HDR10 (mandatory) and Dolby Vision (optional). Standard Blu-rays do not support HDR. UHD Blu-rays have different packaging so they’re easy to spot, see below:

Is it more useful/valuable/impactful for games or movies?

All depends on how the creator uses it. Is HD more useful/valuable/impactful for one vs the other? Anything that makes the image better is going to be good for all images – rising tide and boats, etc etc.

If I just bought a new TV is there a link I can go to that will tell me the best way to set all the video settings?

Woof, can of worms here. There are technically accurate ways to set up a TV, similar to how we set up production monitors. But this involves special sensors and expensive software, so I don’t recommend it for most people. I’d say check AVS Forum for fellow HDR nerds, see how they set up their TVs, and use that as a starting point for yours.

And really, while there are technically accurate ways, there’s no “right” way to set up your TV at home. I can hear other broadcast engineers howling at this, but they can shut up. You paid for a nice TV, set it up so that content on it looks great to you. You don’t have to impress SMPTE, it’s about you and your viewing experience in your specific environment.

Real nerdy math/engineering analysis on data output size, 10-bit color depth, workspace changes, etc.

I’m going to punt on this one, as 1. my goal here was a general overview, and 2. some people much smarter than me have already written a lot of great technically-detailed stuff. For more technical reading, check out these links:

HDR: What It Is and What It Is Not